- TemplatesTemplates

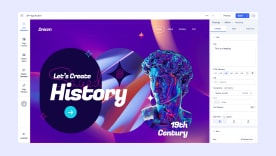

- Page BuilderPage Builder

- OverviewOverview

- FeaturesFeatures

- Dynamic ContentDynamic Content

- Popup BuilderPopup Builder

- InteractionsInteractions

- Layout BundlesLayout Bundles

- Pre-made BlocksPre-made Blocks

- DocumentationDocumentation

- EasyStoreEasyStore

- ResourcesResources

- DocumentationDocumentation

- ForumsForums

- Live ChatLive Chat

- Ask a QuestionAsk a QuestionGet fast & extensive assistance from our expert support engineers. Ask a question on our Forums, and we will get back to you.

- BlogBlog

- PricingPricing

Unwanted URLs Being Crawled Despite Disallow In Robots.txt

Sascha

Hello everyone,

I have a technical question regarding the crawling of specific URLs on my website.

The situation: The main URL of my website is, for example, https://homepage.de. However, during crawling, automatically generated URLs are also picked up, which I would like to exclude. One example of these unwanted URLs is: https://homepage.de/de/component/sppagebuilder/page/1.

What I’ve tried: To prevent these URLs from being crawled, I added the entry Disallow: /components/ in my robots.txt file. Despite this, these links continue to be crawled.

My question: Is there a reliable way to prevent these links from being crawled, even though they’re excluded in the robots.txt? Would any other methods or configurations be needed?

Thanks in advance for any help!
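A note on the rule described above: robots.txt rules are matched as prefixes against the URL path from its start, so `Disallow: /components/` does not cover a path that begins with `/de/component/...` (different leading segment, and `component` without the trailing "s"). The following is a minimal sketch using Python's standard `urllib.robotparser` to check this locally. The adjusted rule `Disallow: /de/component/sppagebuilder/` and the helper name `is_blocked` are assumptions for illustration, not a confirmed fix for this site.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt: str, url: str, agent: str = "*") -> bool:
    """Return True if the given robots.txt text disallows `url` for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


# Example URL taken from the question.
URL = "https://homepage.de/de/component/sppagebuilder/page/1"

# Rule as described in the question.
ORIGINAL_RULE = "User-agent: *\nDisallow: /components/\n"

# Hypothetical adjusted rule whose prefix matches the unwanted path.
ADJUSTED_RULE = "User-agent: *\nDisallow: /de/component/sppagebuilder/\n"

if __name__ == "__main__":
    print("original rule blocks URL:", is_blocked(ORIGINAL_RULE, URL))
    print("adjusted rule blocks URL:", is_blocked(ADJUSTED_RULE, URL))
```

Note that Python's parser only does plain prefix matching, so it is useful for checking simple rules like these but will not evaluate wildcard patterns.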

1 Answer

Paul Frankowski

Accepted Answer

Hi Sascha,

Indeed, as you noticed, Google sometimes indexes links that we (webmasters) don't want indexed. I have a similar problem, but with a different component. Why it happens is hard to say in every case; I don't work at Google, so I can't be sure.

- Search the database (use phpMyAdmin in cPanel) to see whether the phrase "component/sppagebuilder/page" was used in a Page, Article, or Module (even by mistake). If so, correct the link by replacing it with the short URL.

- Then, in Google Search Console > Removals > New Request, add all the links with the long URL, one by one.

- Also check the sitemap; maybe that link was already included. If so, remove it, upload the sitemap, and then resubmit it to Google for reindexing.

- Afterwards, wait 2-7 days for Google's response.
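The sitemap check from the steps above can be sketched in a few lines of Python. This assumes a standard sitemaps.org `urlset` file; the sample XML and the function name `find_unwanted` are made up for illustration, and in practice you would load your own sitemap file instead.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemaps.org sitemap files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Phrase from the answer that identifies the unwanted long URLs.
UNWANTED = "component/sppagebuilder/page"


def find_unwanted(sitemap_xml: str, needle: str = UNWANTED) -> list[str]:
    """Return every <loc> URL in the sitemap that contains `needle`."""
    root = ET.fromstring(sitemap_xml)
    locs = [el.text.strip() for el in root.findall(".//sm:loc", SITEMAP_NS) if el.text]
    return [url for url in locs if needle in url]


# Illustrative sample only; not the real sitemap of the site in question.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://homepage.de/</loc></url>
  <url><loc>https://homepage.de/de/component/sppagebuilder/page/1</loc></url>
</urlset>"""

if __name__ == "__main__":
    for url in find_unwanted(SAMPLE):
        print("remove from sitemap:", url)
```

Any URL it lists is a candidate for removal from the sitemap before resubmitting it in Search Console.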